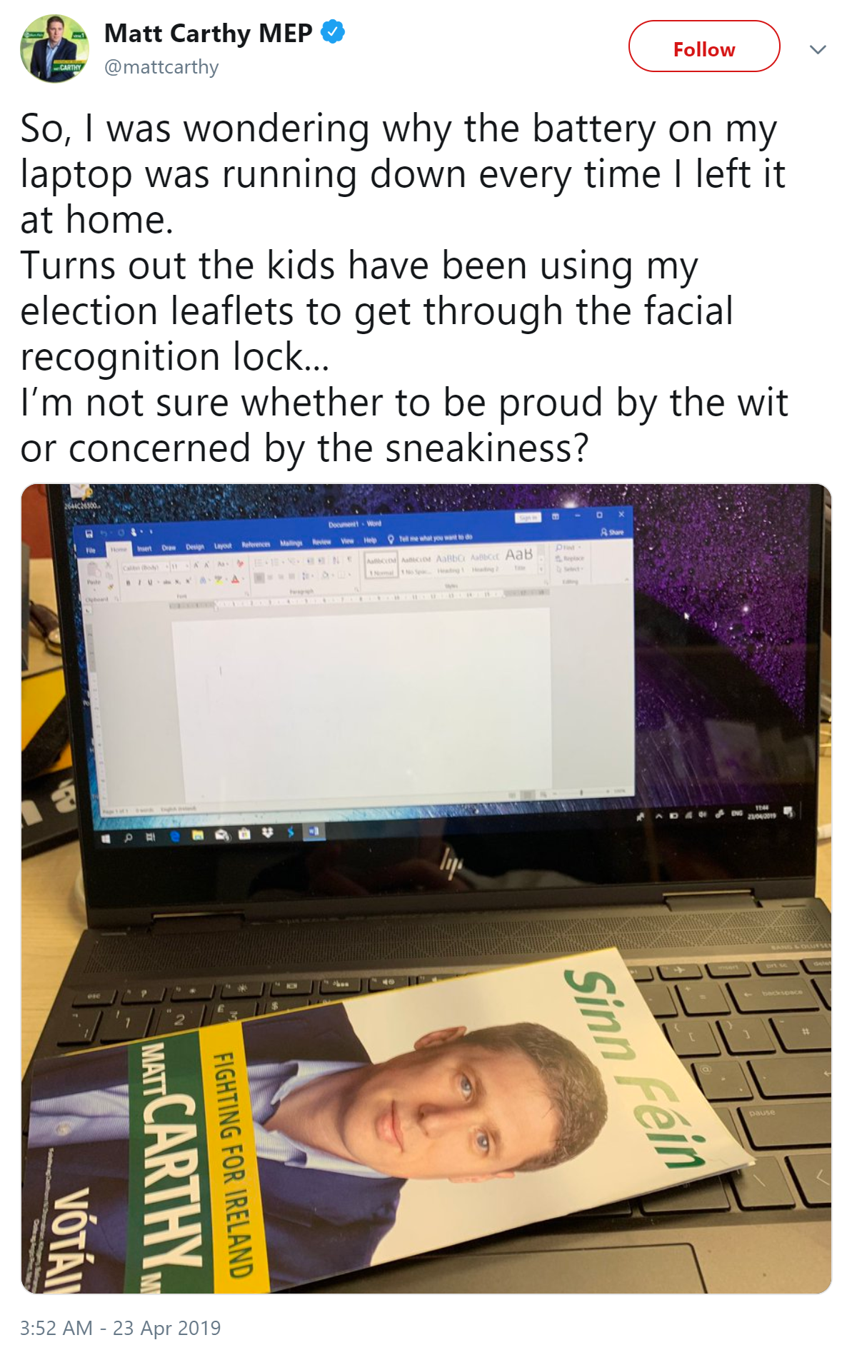

Browsing Twitter a while back, I came across this Tweet by one Matt Carthy:

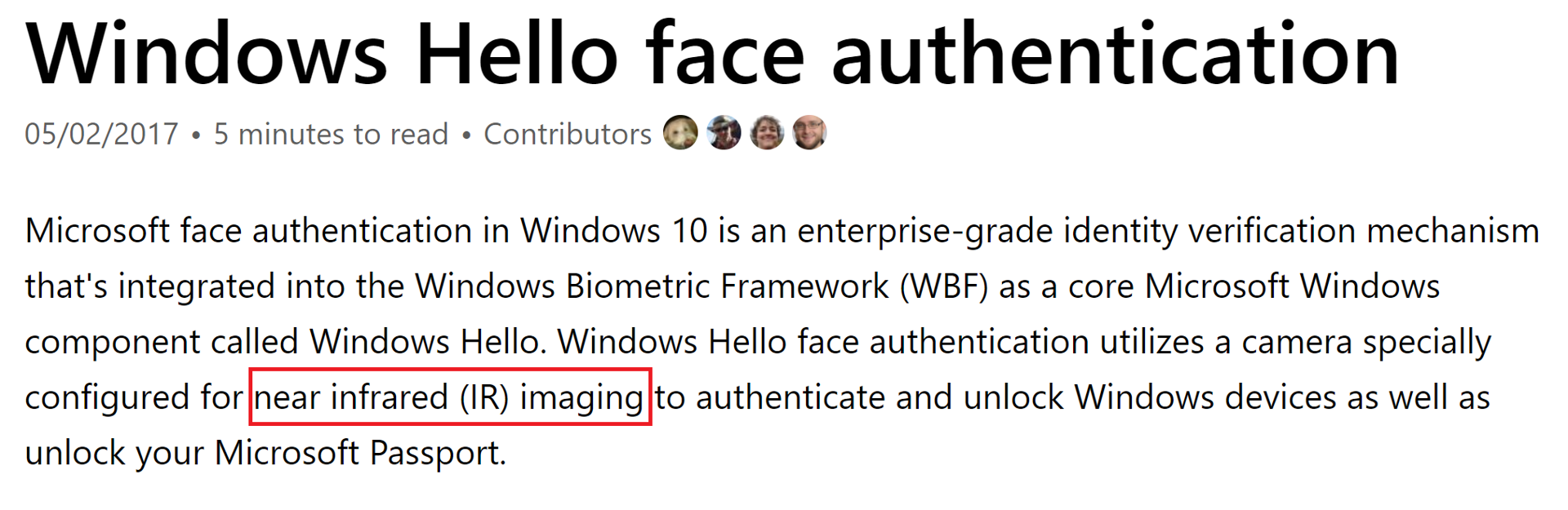

I’m just gonna jump straight in and call this fake. You can see the guy’s using Windows on his host, so it’s safe to assume he’s using Windows Hello to unlock his laptop. What’s Windows Hello? Let’s take a look at the official documentation.

This is rigidly enforced: Windows Hello face recognition cannot be enabled unless your system has an IR-capable camera. Why so strict about IR? Because it prevents simple attacks like the one claimed above. The use of IR in facial-image-based authorisation systems is becoming more and more commonplace, and a lot of phones use it. So, how secure is it?

Some people are starting to play around with the viability of IR attacks. One paper of particular interest to me is Invisible Mask: Practical Attacks on Face Recognition with Infrared (Zhe Zhou, Di Tang, Xiaofeng Wang, Weili Han, Xiangyu Liu, Kehuan Zhang). In this paper, IR lights are used to imitate a person enrolled in a target system.

The general methodology is as follows:

-Compare the distance between the attacker’s face and enrolled members to find people that they sort of look like

-Generate an adversarial example based on a photo of the attacker, using lighting blooms to cause the attacker to appear more like their target

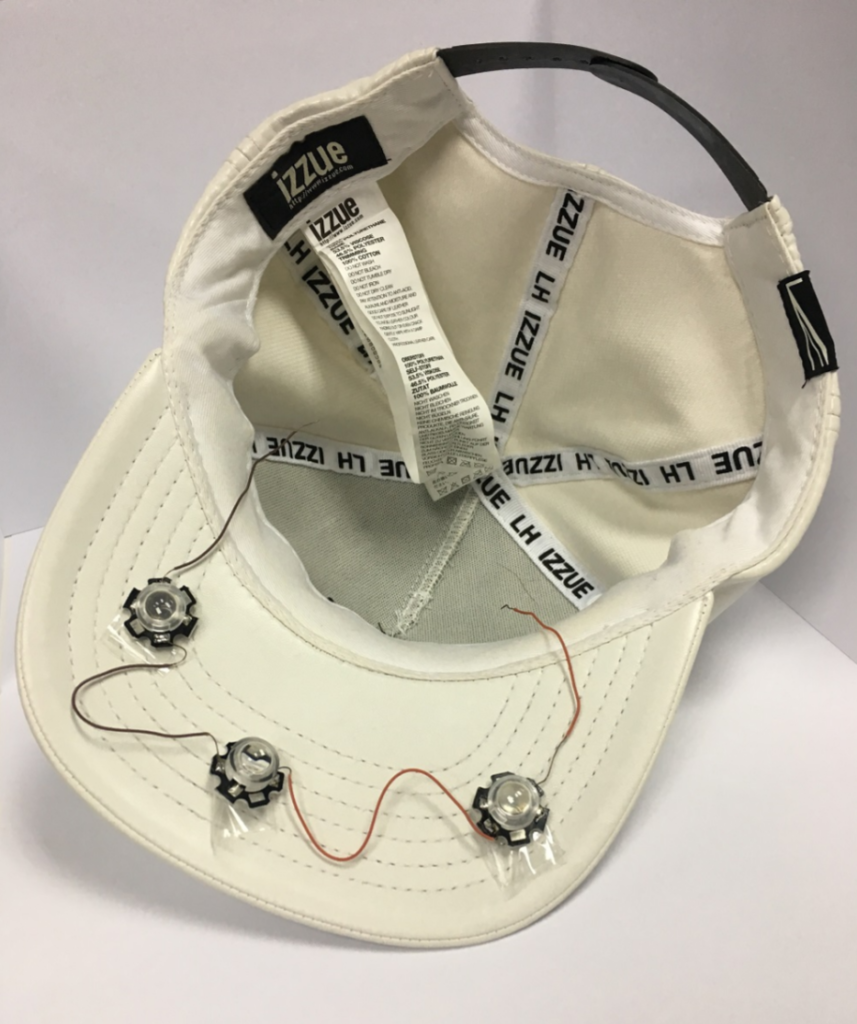

-Use hat-mounted IR lights to recreate this pattern on the attacker’s face

-???

-Profit
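Step one of that recipe is essentially a nearest-neighbour search over face embeddings. Here’s a minimal sketch, assuming each face has already been turned into a fixed-length embedding vector by some recognition model (that step isn’t shown) and that Euclidean distance is the metric. The function and variable names are hypothetical, not taken from the paper:

```python
import math
from typing import Dict, List, Sequence, Tuple

# An embedding is just a fixed-length vector of floats produced by a
# face recognition model (assumed; the model itself is out of scope here).
Embedding = Sequence[float]


def rank_lookalikes(
    attacker: Embedding, enrolled: Dict[str, Embedding]
) -> List[Tuple[str, float]]:
    """Return (name, distance) pairs for every enrolled person, closest first.

    Distance is plain Euclidean (L2) distance between embedding vectors;
    a smaller value means the model considers the two faces more alike.
    """
    scored = [(name, math.dist(attacker, emb)) for name, emb in enrolled.items()]
    return sorted(scored, key=lambda pair: pair[1])
```

The people at the top of the returned list are the ones the attacker "sort of looks like", and so the most promising targets for the later steps.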

I quite like this paper because the authors took it into the real world. Check out this IR light hat:

It’s fairly inconspicuous!

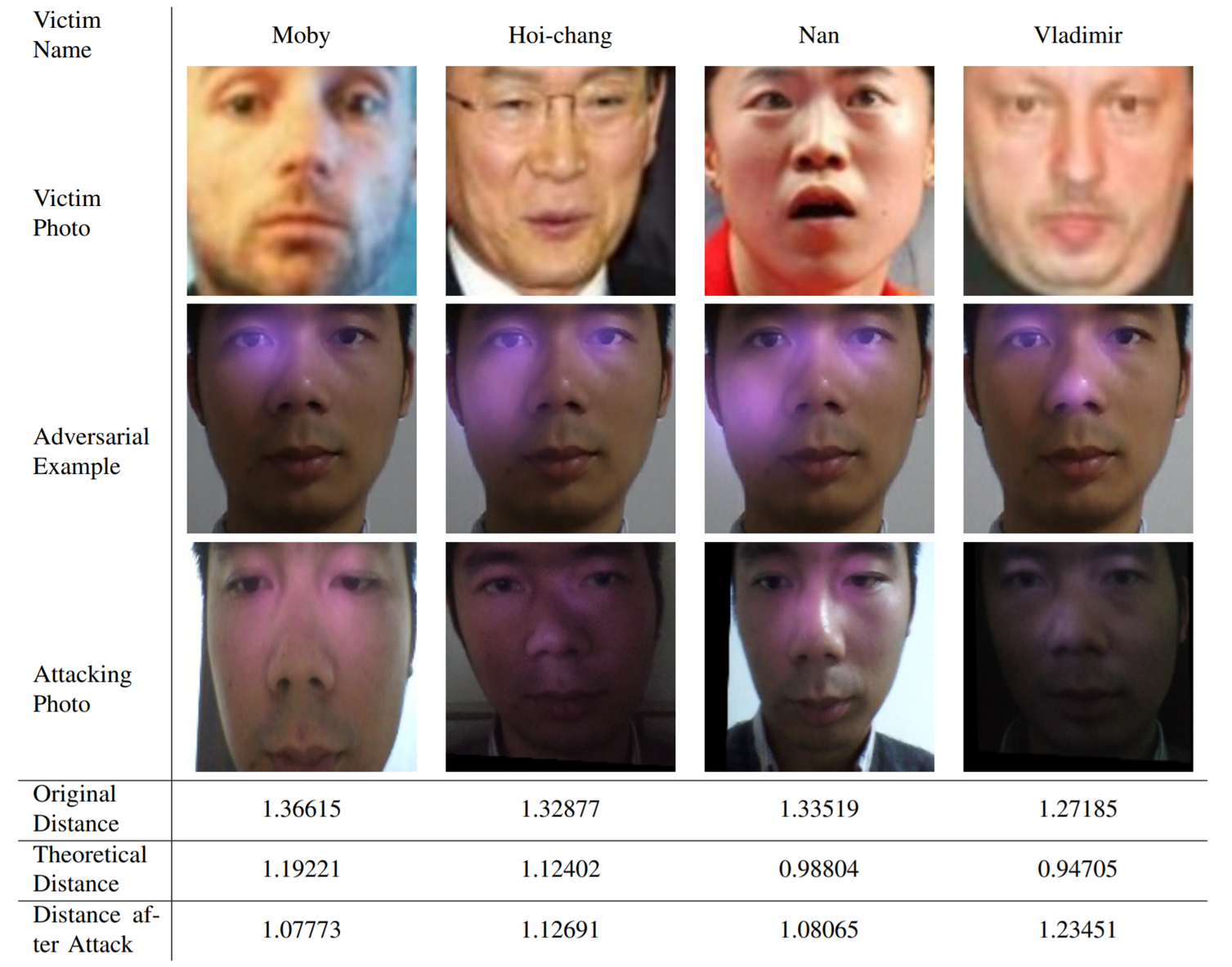

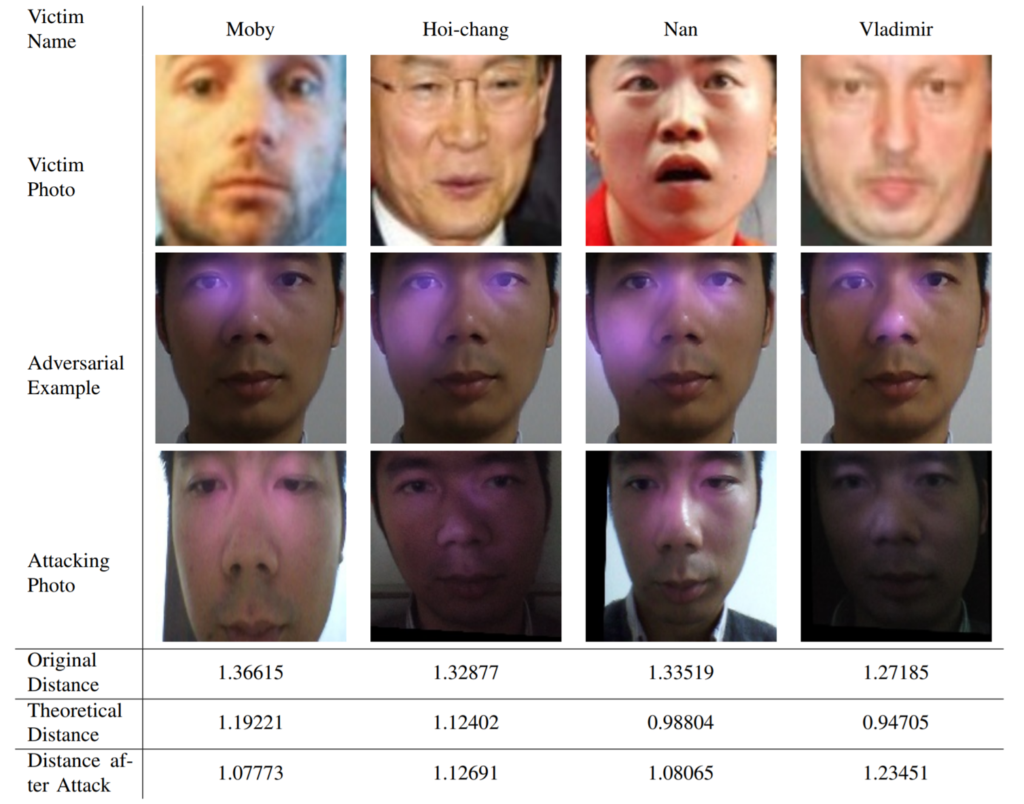

Here are the results:

In the above image, the original distance is the distance between the original photo of the attacker and their target. For this study, the authors selected candidates with an original distance between 1.242 and 1.45. This is because 1.45 was found to be around the largest distance at which the attack was still viable, while 1.242 was found to correspond to a 99%+ likelihood of a match, so anyone closer than that would already pass as the target without any attack. The theoretical distance is the distance between the adversarial example image and the target, and the distance after attack is the distance measured when shining actual IR lights onto the attacker’s face.
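Those two numbers act as thresholds on the distance. A minimal sketch of the selection window and the match test described above, using the 1.242 and 1.45 values quoted from the paper. The function names are hypothetical, and whether each boundary is inclusive or exclusive is my own assumption:

```python
# Values quoted in the write-up; the boundary handling below is an assumption.
MATCH_THRESHOLD = 1.242  # distances at or below this are treated as a match
VIABLE_LIMIT = 1.45      # around the largest distance where the attack still worked


def is_match(distance: float, threshold: float = MATCH_THRESHOLD) -> bool:
    """Assumed decision rule: accept the face if it is close enough to the target."""
    return distance <= threshold


def is_candidate_target(original_distance: float) -> bool:
    """A target is worth attacking only if the attacker does not already match
    it (distance above the match threshold) but is still close enough for the
    IR light attack to plausibly close the gap."""
    return MATCH_THRESHOLD < original_distance <= VIABLE_LIMIT
```

With these, `is_candidate_target` filters on the original distance column, and applying `is_match` to the distance after attack tells you whether the physical attack actually fooled the recogniser.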

Given that a distance of 1.242 corresponds to a 99% likelihood of a match, the above results are pretty promising! The main limitation, however, is that you already need to look vaguely like the target. Still, finding someone who vaguely matches your target shouldn’t be too hard. Or perhaps in future this technique will become more refined, and resemblance will matter less.

As IR becomes more commonplace in surveillance systems (it’s currently used a lot in CCTV systems operating in low-light conditions), I think we’ll see more attacks that use IR to create false matches, rather than just to dazzle cameras.

Interested in learning more about dodging facial detection/recognition systems? Read on here:

Part one: General concepts: https://www.vicharkness.co.uk/2019/01/20/the-art-of-defeating-facial-detection-systems-part-one/

Part two: Adversarial examples: https://www.vicharkness.co.uk/2019/01/27/the-art-of-defeating-facial-detection-systems-part-two-adversarial-examples/

Part three: The art community’s efforts: https://www.vicharkness.co.uk/2019/02/01/the-art-of-defeating-facial-detection-systems-part-two-the-art-communitys-efforts/
