As part of my current degree, we get a few weeks for self-study in between modules. So, I’m going to try to write my notes up into blog posts for whoever may find them useful!

Finite-State Machines

Finite-state machines (FSMs) are a mathematical model of computation used in the design of both computer programs and sequential logic circuits. The basis of FSMs is that a machine can only be in one state at a time, known as the current state. It can change to another state when triggered by an event or condition, and this change is called a transition. Therefore, you can define an FSM in terms of a list of its possible states and the conditions for each transition, which can be drawn as a state diagram or laid out in a state transition table.

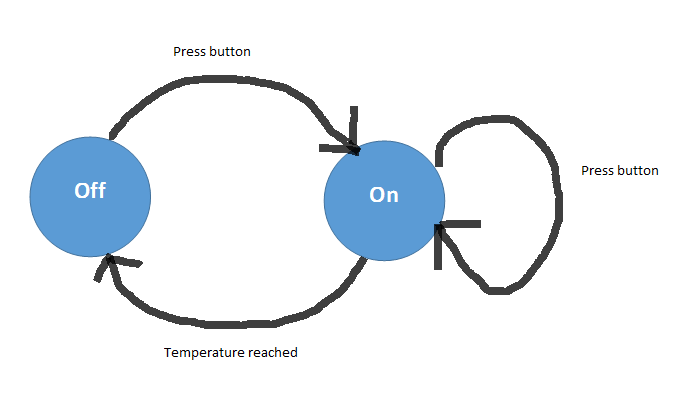

An example of this can be seen below in the state diagram for a kettle:

In this diagram, the kettle is in the “off” state until the button is pressed, which causes it to transition to the “on” state. While it is on, pressing the button does not change its state, as it is already on. When the kettle reaches the desired temperature, it automatically transitions back to off. I don’t feel that “check temperature” needs to be added to this diagram, as it’s not really a transition; it just facilitates one. Its existence seems to be adequately implied by the “temperature reached” transition.
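To make the kettle example concrete, here is a minimal Python sketch that encodes the state transition table as a dictionary keyed by (current state, event). The names `State`, `Event`, `TRANSITIONS` and `Kettle` are my own, and I’ve assumed that an event with no entry in the table (such as “temperature reached” while the kettle is off) simply leaves the state unchanged.

```python
from enum import Enum

class State(Enum):
    OFF = "off"
    ON = "on"

class Event(Enum):
    BUTTON_PRESSED = "button pressed"
    TEMPERATURE_REACHED = "temperature reached"

# State transition table: (current state, event) -> next state
TRANSITIONS = {
    (State.OFF, Event.BUTTON_PRESSED): State.ON,
    (State.ON, Event.BUTTON_PRESSED): State.ON,        # already on, so nothing changes
    (State.ON, Event.TEMPERATURE_REACHED): State.OFF,  # switches itself off
}

class Kettle:
    def __init__(self) -> None:
        self.state = State.OFF  # the machine is only ever in one (current) state

    def handle(self, event: Event) -> State:
        # Assumption: an event with no entry in the table leaves the state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state

kettle = Kettle()
kettle.handle(Event.BUTTON_PRESSED)       # returns State.ON
kettle.handle(Event.TEMPERATURE_REACHED)  # returns State.OFF
```

Keeping the transitions in a table rather than in a chain of `if` statements means the code mirrors the state transition table directly, so adding a new state or event is mostly a matter of adding rows.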

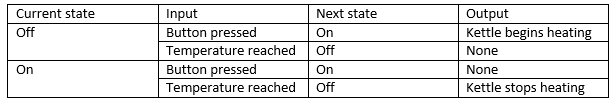

The state transition table for this model could be as follows:

FSMs can also be expressed in other ways, such as UML (Unified Modeling Language) state machines or SDL (Specification and Description Language) state machines.

Multilevel Security

Multilevel security (MLS), typically enforced through Mandatory Access Control (MAC), is the use of computing-system-based access control for information. Users are allowed access to different classifications of information depending on their security clearances. For example, an intern in a company would probably not be able to access Top Secret information, and an MLS system should help enforce this. These systems are no longer used only by the military, however. They could, for instance, be used in a shop where you want clerks to be able to see sales data but not edit it (beyond making sales).

When deciding which users should have which access rights, you need to consider your security policy. This should ideally be based on your threat model, and should in turn feed into your security mechanisms (which may be an implementation of MLS). Because of this, the security policy will often contain statements about which users may access which data. Ross Anderson puts forward three main aspects of a security policy:

- A security policy model: A summary of the protection properties which the system must have.

- A security target: A description of the protection methods that a given security implementation provides. This should relate to the contents of the security policy model (but may go beyond them), and acts as a list of requirements in the testing/evaluation of a product.

- A protection profile: Like a security target, but expressed independently of any particular implementation. This can be used to enable comparisons of different available products and versions.

Bell–LaPadula Model

The Bell–LaPadula Model, built on the concept of the finite-state machine, was developed in the 1970s to address the access control concerns of the US military. It concentrates on data confidentiality and controlled access to classified information. In this model, the entities in the information system are divided into subjects and objects, and the notion of a “secure state” is defined: a system state is secure only if the permitted access modes of subjects (users) to objects (files) are in accordance with the security policy. Each state transition may only move the system from one secure state to another, so in theory it should be impossible for the system to enter an insecure state. Determining whether access is secure takes three considerations into account: the subject is authenticated, the subject has a need to know, and the subject has formal access approval. If these conditions are all met, then a subject’s access to an object can be considered secure (and so the system is in a secure state). The clearance/classification scheme is expressed in terms of a lattice. To implement this system, three security properties are used:

- The Simple Security Property: No read up. A subject cannot read an object at a security level higher than their own.

- The Star-Property (*-Property): No write down. A subject cannot write to an object at a lower security level than their own.

- The Discretionary Security Property: An access matrix used to specify Discretionary Access Control (discretionary in the sense that a subject with certain access permissions is capable of passing that permission on to any other subjects, unless restrained by mandatory access control).
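The three properties can be sketched in a few lines of Python. This is a hypothetical illustration rather than a real reference monitor: I’ve assumed a simple total ordering of four levels (a real scheme uses a lattice), and the access matrix is just a dictionary mapping (subject, object) pairs to the set of permitted modes. Access is granted only if both the mandatory checks and the discretionary matrix allow it.

```python
from enum import IntEnum

class Level(IntEnum):
    # Hypothetical levels, ordered from least to most sensitive.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3

def can_read(subject_level: Level, object_level: Level) -> bool:
    # Simple Security Property: no read up.
    return subject_level >= object_level

def can_write(subject_level: Level, object_level: Level) -> bool:
    # *-Property: no write down.
    return subject_level <= object_level

def allowed(subject: str, obj: str, mode: str, levels: dict, matrix: dict) -> bool:
    """Grant access only if the mandatory rules AND the discretionary access matrix agree."""
    s_level, o_level = levels[subject], levels[obj]
    if mode == "read":
        mandatory_ok = can_read(s_level, o_level)
    elif mode == "write":
        mandatory_ok = can_write(s_level, o_level)
    else:
        raise ValueError(f"unknown access mode: {mode}")
    # Discretionary Security Property: the matrix must also list this mode.
    discretionary_ok = mode in matrix.get((subject, obj), set())
    return mandatory_ok and discretionary_ok

levels = {
    "intern": Level.CONFIDENTIAL,
    "analyst": Level.SECRET,
    "memo": Level.CONFIDENTIAL,
    "war_plan": Level.TOP_SECRET,
}
matrix = {
    ("intern", "memo"): {"read", "write"},
    ("intern", "war_plan"): {"read"},  # granted at the discretionary level only
    ("analyst", "memo"): {"read", "write"},
}
print(allowed("intern", "war_plan", "read", levels, matrix))  # False: no read up
print(allowed("analyst", "memo", "read", levels, matrix))     # True: reading down is fine
print(allowed("analyst", "memo", "write", levels, matrix))    # False: no write down
```

Even though the matrix grants the intern read access to `war_plan`, the mandatory check still refuses it, which is the sense in which MAC constrains DAC.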

It is worth noting that it is possible to implement both DAC and MAC, where DAC allows for one category of access controls (which users can transfer among each other), and MAC refers to a secondary category which imposes constraints upon the DAC category.

Another point to note is that the Bell–LaPadula Model contains the concept of trusted subjects. Trusted subjects are not bound by the star-property, generally because they have been shown to be trustworthy within the organisation. These users could be a potential cause of breaches within the system.

It is also possible in the Bell–LaPadula Model to use a “Strong Star-Property” rather than the usual Star-Property. Under this property, subjects may only write to objects at exactly their own security level, so the writing up permitted by the usual Star-Property is no longer allowed. This policy may be motivated by data integrity concerns, and was anticipated in the Biba Model.

A tranquility principle may also be used in the Bell–LaPadula Model. This states that the classification of a subject or object does not change while it is being referenced. It can be implemented as the “principle of strong tranquility”, in which security levels do not change during the normal operation of the system, or as the “principle of weak tranquility”, in which security levels may never change in such a way as to violate a defined security policy. Weak tranquility allows systems to observe the “principle of least privilege”, in which objects/processes/users are only able to access the information and resources that are necessary for a legitimate purpose. They may then accumulate higher security levels/access permissions as needed.
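The variants above can be sketched as follows, again with a hypothetical four-level ordering. `can_write` shows how trusted subjects and the Strong Star-Property change the write rule, and the `Subject` class illustrates one possible reading of weak tranquility with least privilege: a subject starts at the lowest level and may raise its current level as needed, but never above its clearance and never back down. The class and method names are assumptions for illustration only.

```python
from enum import IntEnum

class Level(IntEnum):
    # Hypothetical levels, ordered from least to most sensitive.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3

def can_write(subject_level: Level, object_level: Level, *,
              trusted: bool = False, strong_star: bool = False) -> bool:
    if trusted:
        # Trusted subjects are not bound by the star-property.
        return True
    if strong_star:
        # Strong Star-Property: write only at exactly the subject's own level.
        return subject_level == object_level
    # Usual Star-Property: no write down (writing up is allowed).
    return subject_level <= object_level

class Subject:
    """Hypothetical subject under weak tranquility and least privilege."""

    def __init__(self, clearance: Level) -> None:
        self.clearance = clearance
        self.current = Level.UNCLASSIFIED  # start with the least privilege possible

    def raise_level(self, needed: Level) -> None:
        # The level may only change in ways that respect the policy: never above
        # the clearance, and never lowered (lowering would let the subject write
        # data it has already read down to a lower level).
        if needed > self.clearance:
            raise PermissionError(f"{needed.name} exceeds clearance {self.clearance.name}")
        self.current = max(self.current, needed)

s = Subject(Level.SECRET)
s.raise_level(Level.CONFIDENTIAL)
print(s.current.name)                                          # CONFIDENTIAL
print(can_write(s.current, Level.UNCLASSIFIED))                # False: no write down
print(can_write(s.current, Level.UNCLASSIFIED, trusted=True))  # True: trusted subject
```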

Biba (Integrity) Model

The Biba Integrity Model was also developed in the 1970s, and concentrates on the protection of data integrity. Objects and subjects are grouped into ordered levels of integrity. Subjects may not corrupt objects at levels ranked above their own, or be corrupted by objects at a lower level than their own. For example, a person could be prevented from reading The Daily Mail when forming an opinion on a matter. The security rules defined by the Biba Model are:

- The Simple Integrity Property: No read down. Subjects at a given level of integrity must not read an object at a lower integrity level.

- The Star Integrity Property (*-Integrity Property): No write up. Subjects at a given level of integrity must not write to any object at a higher level of integrity.

- Invocation Property: A process from below cannot request higher access; it may only invoke subjects at an equal or lower integrity level.
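Biba is essentially Bell–LaPadula turned upside down, which this hypothetical sketch makes visible if you compare the direction of each comparison with the Bell–LaPadula properties. The integrity levels are made up for illustration.

```python
from enum import IntEnum

class Integrity(IntEnum):
    # Hypothetical integrity levels, ordered from least to most trustworthy.
    UNTRUSTED = 0
    USER = 1
    SYSTEM = 2

def can_read(subject: Integrity, obj: Integrity) -> bool:
    # Simple Integrity Property: no read down.
    return obj >= subject

def can_write(subject: Integrity, obj: Integrity) -> bool:
    # *-Integrity Property: no write up.
    return obj <= subject

def can_invoke(caller: Integrity, callee: Integrity) -> bool:
    # Invocation Property: only subjects at the caller's level or below may be invoked.
    return callee <= caller

print(can_read(Integrity.SYSTEM, Integrity.UNTRUSTED))  # False: would be corrupted
print(can_write(Integrity.USER, Integrity.SYSTEM))      # False: would corrupt
print(can_invoke(Integrity.SYSTEM, Integrity.USER))     # True
```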

Clark–Wilson Model

The Clark–Wilson Model was developed in the 1980s and also deals primarily with information integrity, which it aims to maintain by preventing corruption of objects in systems due to either error or malicious actions. It uses an integrity policy to describe how the data items in a system should be kept valid when transitioning from one state to the next, and specifies the capabilities of the various principals in a system. The model defines enforcement rules and certification rules, which define the data items and processes that provide the basis for the integrity policy. The core of the Clark–Wilson Model is the notion of a transaction: a well-formed transaction is a series of operations that transitions the system from one consistent state to another consistent state. The integrity policy enforces the integrity of the transactions. The principle of “separation of duty” requires that the certifier of a transaction and its implementer be different entities, to prevent abuse.

The Clark–Wilson Model contains a number of basic constructs that represent both the objects and the processes that operate on the objects. The key object type in the Clark–Wilson Model is the Constrained Data Item (CDI). An Integrity Verification Procedure (IVP) ensures that all CDIs in a system are valid in a given state. Transactions enforcing the integrity policy are represented by Transformation Procedures (TPs). A TP takes as input a CDI or an Unconstrained Data Item (UDI) (representing system input, such as that provided by a user) and produces a CDI. A TP must be used to make any valid state transition, and each TP must be certified to transform all possible values of a UDI into a “safe” CDI. The relationship between a user, a set of programs (TPs) and a set of objects (CDIs/UDIs) is known as a Clark–Wilson triple. A user capable of certifying such a relationship should not be able to execute the programs specified in that relation.
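As a minimal sketch of these constructs, assume a hypothetical banking system where account balances are CDIs and the amount a user types in is a UDI. The `ivp` function checks that every CDI is valid, and `deposit_tp` is a TP that either converts the UDI into a valid update of a CDI or rejects it outright. None of these names come from the model itself.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class CDI:
    """Constrained Data Item: a hypothetical account balance in pence."""
    owner: str
    balance: int

def ivp(cdis: List[CDI]) -> bool:
    """Integrity Verification Procedure: every balance must be a non-negative integer."""
    return all(isinstance(c.balance, int) and c.balance >= 0 for c in cdis)

def deposit_tp(account: CDI, udi: str) -> CDI:
    """Transformation Procedure: apply an untrusted UDI (raw user text) to a CDI.

    The UDI is either accepted, moving the CDI to a new valid state,
    or rejected, leaving the CDI unchanged.
    """
    try:
        amount = int(udi)
    except ValueError:
        raise ValueError(f"rejected UDI {udi!r}: not a whole number") from None
    if amount <= 0:
        raise ValueError(f"rejected UDI {udi!r}: deposit must be positive")
    account.balance += amount
    return account

acct = CDI("alice", 1000)
deposit_tp(acct, "250")
print(acct.balance, ivp([acct]))  # 1250 True
```

Because every path through `deposit_tp` either leaves the balance untouched or adds a positive whole number, a valid CDI stays valid, which is the kind of property a certifier would need to convince themselves of.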

The model consists of two types of rules: Certification Rules (C) and Enforcement Rules (E). These make nine rules to ensure external/internal integrity of objects:

- C1: All IVPs must ensure that the CDIs are in a valid state when the IVP is run

- C2: A TP must transform CDIs from one valid state to another

- C3: Assignments of TPs to users must satisfy “separation of duty”

- C4: The operations of all TPs must be logged with enough information to reconstruct the operation

- C5: All TPs executing on UDIs must result in a valid CDI. UDIs can be accepted (converted to a CDI) or rejected

- E1: Only certified TPs can manipulate CDIs, and so a list of certified relations must be kept

- E2: The system must associate a user with each TP and set of CDIs. The TP may access the CDI on behalf of a user if it is “legal”

- E3: The identity of each user attempting to execute a TP must be authenticated

- E4: Only the certifier of a TP may change the list of entities associated with that TP
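Several of the enforcement rules (plus C3 and C4) can be pictured as checks made by a reference monitor before a TP is allowed to run. The `ClarkWilsonMonitor` class below is a hypothetical sketch, not part of the model: it tracks authenticated users (E3), certified TPs and their certifiers (E1, E4), permitted (user, TP, CDI) triples (E2), refuses to let a certifier be the user in a triple for the TP they certified (C3), and logs each execution (C4). The certification rules C1, C2 and C5 are about establishing properties of the IVPs and TPs themselves, so they don’t appear as runtime checks here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (user, TP name, CDI name)

@dataclass
class ClarkWilsonMonitor:
    """Hypothetical reference monitor enforcing some of the Clark-Wilson rules."""
    authenticated: Set[str] = field(default_factory=set)        # E3
    certifier_of: Dict[str, str] = field(default_factory=dict)  # TP -> certifier (E1, E4)
    triples: Set[Triple] = field(default_factory=set)           # E2
    log: List[Triple] = field(default_factory=list)             # C4

    def certify(self, certifier: str, tp: str) -> None:
        self.certifier_of[tp] = certifier

    def add_triple(self, requester: str, user: str, tp: str, cdi: str) -> None:
        # E4: only the TP's certifier may change who it is associated with.
        if self.certifier_of.get(tp) != requester:
            raise PermissionError(f"{requester} did not certify {tp}")
        # C3 / separation of duty: a certifier may not execute the TP they certified.
        if user == requester:
            raise PermissionError("a certifier may not also execute the TP")
        self.triples.add((user, tp, cdi))

    def run(self, user: str, tp: str, cdi: str, action: Callable[[], object]) -> object:
        if user not in self.authenticated:       # E3: the user must be authenticated
            raise PermissionError(f"{user} is not authenticated")
        if tp not in self.certifier_of:          # E1: only certified TPs touch CDIs
            raise PermissionError(f"{tp} is not certified")
        if (user, tp, cdi) not in self.triples:  # E2: the triple must be allowed
            raise PermissionError(f"{user} may not run {tp} on {cdi}")
        result = action()
        # C4: record the operation (a real log would hold far more detail).
        self.log.append((user, tp, cdi))
        return result

monitor = ClarkWilsonMonitor()
monitor.certify("carol", "deposit")
monitor.add_triple("carol", "alice", "deposit", "alice_account")
monitor.authenticated.add("alice")
print(monitor.run("alice", "deposit", "alice_account", lambda: "done"))  # done
```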

Brewer and Nash Model/Chinese Wall Model

The Brewer and Nash/Chinese Wall Model was first described in the 1980s, citing the influence of the Clark–Wilson Model. In the financial sector, a Chinese Wall is a method employed most commonly in investment banks, between the corporate-advisory areas and the brokering department, to prevent issues such as conflicts of interest and insider trading. The Brewer and Nash Model adopts an information-flow model to determine which pieces of information a specific user should be allowed to view, depending on what other information the user has previously accessed. In keeping with the Bell–LaPadula Model, the Brewer and Nash Model designates analysts as subjects, and information as objects. Security labels are used which are made up of two indicators: the company dataset, and the conflict of interest class. When an analyst accesses a company’s dataset, the conflict of interest class dynamically determines which other datasets the analyst should be allowed to access. If a dataset has a conflict of interest class denoting a company in competition with a previously accessed dataset, the system does not allow the analyst to access the new dataset. Write access is only permitted when a conflict of interest is not present.
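The dynamic nature of the Chinese Wall is easiest to see in code. In this hypothetical Python sketch, each company dataset is mapped to its conflict of interest class, and the system remembers which datasets each analyst has read. The write rule here is my own simplified interpretation of “no conflict of interest”: writing is allowed only if the analyst may read the dataset and has not read any other company’s dataset, since otherwise information could flow from one company to another.

```python
from typing import Dict, Set

class ChineseWall:
    """Hypothetical sketch of Brewer and Nash style access checks."""

    def __init__(self, conflict_class: Dict[str, str]) -> None:
        # Maps each company dataset to its conflict of interest class.
        self.conflict_class = conflict_class
        self.history: Dict[str, Set[str]] = {}  # analyst -> datasets already accessed

    def can_read(self, analyst: str, dataset: str) -> bool:
        seen = self.history.get(analyst, set())
        if dataset in seen:
            return True  # already on this side of the wall
        # Deny if the analyst has accessed a competitor in the same class.
        return all(self.conflict_class[d] != self.conflict_class[dataset] for d in seen)

    def read(self, analyst: str, dataset: str) -> None:
        if not self.can_read(analyst, dataset):
            raise PermissionError(f"{analyst} has a conflict of interest with {dataset}")
        self.history.setdefault(analyst, set()).add(dataset)

    def can_write(self, analyst: str, dataset: str) -> bool:
        # Simplified write rule: the analyst must be able to read the dataset and
        # must not have read any other company's data that could leak into it.
        seen = self.history.get(analyst, set())
        return self.can_read(analyst, dataset) and seen <= {dataset}

wall = ChineseWall({"BankA": "banks", "BankB": "banks", "OilCo": "oil"})
wall.read("ann", "BankA")
print(wall.can_read("ann", "BankB"))   # False: competitor of BankA
print(wall.can_read("ann", "OilCo"))   # True: different conflict class
print(wall.can_write("ann", "BankA"))  # True: only BankA has been read
wall.read("ann", "OilCo")
print(wall.can_write("ann", "BankA"))  # False: OilCo data could now leak into BankA
```

The analyst can still move on to a company in a different conflict of interest class, but under this rule doing so costs them write access to the first company’s dataset.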

Non-Interference Models

Non-interference models are strict multilevel security policy models, developed in the 1980s. They were designed to address potential flaws in the aforementioned models, such as covert channels. Covert channels are a type of security attack which create the capability to transfer information between systems that are not allowed to communicate according to the computer security policy. This tends to be done in very subtle ways, such as through heat. Non-interference models work by ensuring that all low-sensitivity inputs produce the same low-sensitivity outputs, regardless of what high-security-level inputs there may be. If a low-security-level user is working on a machine, it should respond to that user’s inputs in exactly the same manner whether or not a high-security-level user is working on sensitive data. Low-level users should not be able to acquire any information about the activities of high-level users. It is very difficult to verify that a system is non-interference compliant, and few such systems may be commercially available.
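Although formally verifying non-interference is hard, the idea itself can be illustrated with a test harness. In this hypothetical Python sketch, a “system” is any function that takes a sequence of (level, input) events and returns one output per event. `appears_noninterfering` compares what a low-level user sees with and without the high-level events present. The two toy systems show a leak (a shared request counter, a bit like a covert channel) and a system that keeps the levels separate. Checking a finite set of traces can only ever find violations, not prove compliance.

```python
from typing import Callable, List, Tuple

Event = Tuple[str, str]                      # (level, input); level is "low" or "high"
System = Callable[[List[Event]], List[str]]  # returns one output per event

def low_view(system: System, events: List[Event]) -> List[str]:
    """The outputs a low-level user observes: responses to low-level inputs only."""
    outputs = system(events)
    return [out for (level, _), out in zip(events, outputs) if level == "low"]

def purge_high(events: List[Event]) -> List[Event]:
    """The same trace with every high-level input removed."""
    return [e for e in events if e[0] == "low"]

def appears_noninterfering(system: System, traces: List[List[Event]]) -> bool:
    # Low users should see exactly the same thing whether or not high inputs occurred.
    return all(low_view(system, t) == low_view(system, purge_high(t)) for t in traces)

def leaky(events: List[Event]) -> List[str]:
    # Replies with the total number of requests so far, so low users can
    # infer how much high-level activity there has been.
    return [str(i + 1) for i in range(len(events))]

def separated(events: List[Event]) -> List[str]:
    # Keeps a separate request counter per level, so high activity is invisible to low users.
    counts = {"low": 0, "high": 0}
    outputs = []
    for level, _ in events:
        counts[level] += 1
        outputs.append(str(counts[level]))
    return outputs

trace = [("low", "a"), ("high", "x"), ("low", "b")]
print(appears_noninterfering(leaky, [trace]))      # False
print(appears_noninterfering(separated, [trace]))  # True
```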
